The Dead Are Campaigning. The Living Should Worry.

Politicians are fabricating deepfakes of themselves. The deceased are campaigning for their successors. And WhatsApp has become an ungovernable battlefield. India's AI election was the world's largest experiment in algorithmic democracy. Here is what political leaders, policymakers, and citizens must understand before it is too late.

Last year, I got an unusual request that I turned down. A politician, whose identity I’ll keep private, asked me to make a deepfake video of him making a mistake. His plan was to use it as a cover, so if any real embarrassing footage ever came out, he could claim it was just another fake. It’s striking that we’ve reached a point where people try to protect themselves from the truth by creating lies in advance.

This isn’t science fiction. This is the reality of Indian politics in 2025.

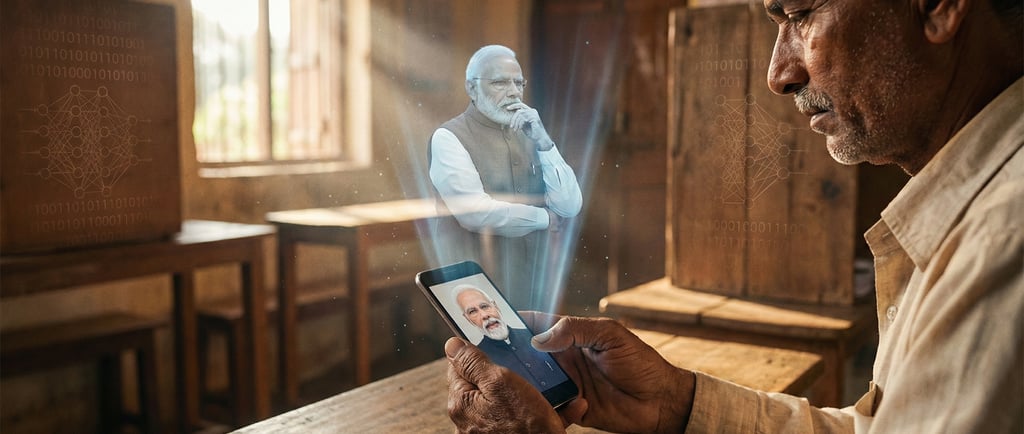

India, the world’s largest democracy, has also become the biggest testing ground for artificial intelligence in elections and government. In the 2024 general elections, political parties reportedly spent about ₹450 crore ($50 million) on AI-generated content. Some even brought back deceased politicians through deepfakes to support their successors. Prime Minister Modi’s speeches were instantly translated into many regional languages using the Bhashini platform in the NaMo app. At the same time, opposition parties made satirical deepfakes of Modi singing Bollywood songs about supposed crony capitalism.

No one was jailed or disqualified. The Election Commission of India issued only a weak advisory on deepfakes in May 2024, long after the harm had been done.

What does this mean for democracy everywhere? And why should political leaders, policy experts, and citizens care about what’s happening in India?

The Two Faces of AI in Democracy

It’s essential to realise that AI in politics isn’t just one thing. It can have very different effects depending on who uses it and why.

One side is what I call democratising AI: tools that truly help more people take part in politics. For example, when someone in Odisha can listen to Modi’s Mann Ki Baat in perfect Odia, it helps connect people. If an AI chatbot helps a new candidate write a clear manifesto without needing costly consultants, it makes things fairer. When older party workers receive personalised WhatsApp videos thanking them by name for their years of service, it helps build stronger relationships on a large scale.

The BJP’s use of Bhashini to overcome language barriers in southern and eastern states, where Hindi isn’t widely spoken, is a clear example of AI promoting inclusion. In Tokyo, an independent candidate used an AI avatar to answer thousands of voter questions. In Pennsylvania, a congressional candidate used an AI volunteer named Ashley to call voters. These uses of AI aren’t necessarily harmful.

On the other hand, there’s weaponised AI: tools meant to deceive, manipulate, and break trust. For example, the Dravida Munnetra Kazhagam (DMK) used a deepfake of the late M. Karunanidhi to support his son’s campaign, crossing an ethical line since the deceased can’t give consent. This kind of emotional manipulation targets voters who remember Karunanidhi fondly. The Communist Party of India (Marxist) did something similar with Buddhadeb Bhattacharjee. These aren’t tributes; they’re using the dead for political advantage.

There’s an even darker side. During the 2024 elections, an AI company founder said politicians asked him to put their opponents’ faces onto explicit content. Others wanted fake audio of rivals making offensive remarks. He said he refused, but it’s unclear how many others agreed to such requests.

The Liar’s Dividend: Why the Powerful Benefit Most

Here is the uncomfortable truth that few commentators dare to discuss: in an era of ubiquitous deepfakes, the powerful gain more than the powerless.

Imagine this: a real video comes out showing a cabinet minister taking a bribe. Before AI, this would have been a massive scandal. Now, the minister can quickly claim, “This is a deepfake made by the opposition.” Most news outlets don’t wait for a detailed analysis, so the claim creates enough doubt for the minister to get by. This is called the liar’s dividend.

When anything can be faked, it’s hard to know what’s real. Guilty people can deny real evidence, and innocent people can be framed. Both damage public trust, but the guilty benefit more because they’re already used to avoiding blame.

In Romania, the 2024 presidential election was annulled after evidence of AI-driven foreign interference, including fake videos. In India, even though deepfakes were everywhere, no election was annulled. Why? Because it’s almost impossible to prove that AI content changed the election result. Without clear proof, politicians use the liar’s dividend to their advantage.

WhatsApp: The Encrypted Battlefield

To really see how AI affects Indian elections, don’t focus on Twitter or Facebook. Pay attention to WhatsApp.

India has more than 535 million WhatsApp users, making it the biggest market for the app. During elections, political parties manage huge networks of WhatsApp groups, often using the phone numbers of people who don’t even know their identities are being misused. Because of end-to-end encryption, regulators, fact-checkers, and even Meta can’t see what’s being shared.

AI-generated content spreads widely in these encrypted spaces. There are voice clones of party leaders, fake polls with fabricated results, and audio clips of opponents making inflammatory remarks. Some content is meant to stir up religious divisions. By the time a deepfake is proven false, it’s already been shared thousands of times in family, religious, and work groups.

Meta promised to block manipulative AI content during India’s elections. But in reality, the company approved 14 AI-made election ads with supremacist language and calls for violence against minorities. The ads cleared Meta’s review, so the promises meant little.

This is the situation political strategists face. WhatsApp can’t be controlled, and AI makes it even more dangerous. Together, they create a communication channel that no election commission can oversee.

From Governance to the Algorithmic State

Elections are just part of the picture. The bigger change is in how governments are operating.

India’s digital public infrastructure, built on Aadhaar, UPI, and DigiLocker, has created a foundation unlike that of any other large democracy. Nearly 90 crore Indians are now connected to the internet. The volume of data they generate is astronomical. And AI is increasingly being deployed to make sense of this data for governance and political purposes.

The IndiaAI Mission has committed ₹10,300 crore over five years. State governments in Gujarat, Karnataka, Tamil Nadu, Maharashtra, Telangana, and Andhra Pradesh have launched AI governance initiatives. The National Data and Analytics Platform (NDAP) is designed to enhance AI-driven policymaking. CoWIN, which managed the world’s largest vaccination drive, used multilingual AI systems to address linguistic diversity.

But this is where political leaders need to be cautious. The next step isn’t just using AI to help bureaucrats. It’s about agentic AI: systems that can sense, think, and act with little human help.

Imagine an AI system that does not merely flag building permits that are overdue but automatically contacts applicants for missing information, conducts compliance checks, and issues approvals. That is not automation. That is the machine making decisions that previously required human judgement.

The Berlin Global GovTech Centre calls this the “agentic state” and says it’s as significant a change as the invention of modern bureaucracy in the 1800s. Bureaucracies are process-driven and follow rules, but the agentic state will focus on results, be more personalised, and adapt quickly. Instead of paper and patience, it will need data and algorithms.

This brings up big questions for political leaders. Who is responsible if an AI system rejects someone’s welfare application? What if predictive policing unfairly targets certain groups? How can elected officials oversee systems they don’t fully understand? Most importantly, how can citizens challenge a machine’s decision?

The Indian Paradox

India is at a unique crossroads. It has built a digital public infrastructure that other countries admire. The India Stack (Aadhaar for identity, UPI for payments, and DigiLocker for documents) is seen as a model for digital inclusion. But at the same time, India ranks 159th out of 180 countries in the World Press Freedom Index, and the Election Commission has faced criticism for failing to act on AI manipulation.

This contradiction is inherent. The same systems that empower citizens can also be used for government surveillance. The AI that translates the Prime Minister’s speeches can also spread propaganda. The data that helps target welfare can also be used to profile people who disagree with the government.

The V-Dem Report 2025 shows that autocracy has been rising worldwide for 25 years. It asks whether democracy is losing ground. AI isn’t the leading cause, but it speeds up the process. Countries with weak institutions often use AI for surveillance, censorship, and repression rather than to help citizens. The big question for India is which path it will choose.

What Political Leaders Must Understand

I work with political leaders on communication strategy. Here’s what I tell them about AI:

First, disclosure will soon be required. The EU’s AI Act already demands transparency about AI-generated political content. India will probably do the same, but only after a major scandal forces action. Leaders who are open about using AI now will earn more trust in the future.

Second, authenticity is your edge. When anything can be faked, being real matters more than ever. Town halls, Pad Yatras, meeting voters directly, and unscripted moments all show authenticity that AI can’t easily copy.

Third, invest in AI literacy. Your team should know what AI can do, what’s ethical, and what’s legal. If your staff doesn’t understand AI, it’s a weakness. We’ve recently trained a few teams on this.

Fourth, create a plan for responding to deepfakes. When a deepfake of you appears, and it will, have a response ready. Acting quickly is crucial. The first 48 hours decide if the story takes hold.

Fifth, focus on governance, not just campaigns. AI’s role in government will last longer than its role in elections. The choices you make now about citizen data, algorithms, and automation will shape your legacy.

The Road Ahead

We’re at a turning point. The technology exists, but the rules don’t. Ethical guidelines are just beginning to take shape, and there are significant incentives to misuse AI.

India’s 2024 experience shows that fears that AI would ruin elections were exaggerated. Indian democracy did not fall apart, and no results were annulled. Most deepfakes just became part of the usual political mudslinging. Voters turned out to be tougher than many expected.

But being resilient doesn’t mean being immune. Every election makes AI manipulation seem more normal. Every deepfake that isn’t punished encourages more. Every government that uses AI for surveillance without oversight sets an example for others.

The real question isn’t if AI will change politics and government. It already has. The question is whether we will guide that change or let it guide us. Will democratic values control how AI is used, or will AI weaken those values? Will India show that democracy can handle AI without losing itself?

I’m still cautiously optimistic. It’s not because I think politicians are always honest or regulators are always effective, but because citizens usually make good decisions when they have the facts. The same technology that makes deepfakes also helps detect them. The platforms that spread false information can also support fact-checking. The same AI that can be used to manipulate can also be used to empower.

What really matters is human choice. People like me must say no to unethical requests. Journalists need to keep high standards for checking facts. Voters should be sceptical, but not cynical. Political leaders must see that short-term tricks lead to long-term problems.

Chanakya understood this millennia ago: “Before you start some work, always ask yourself three questions: Why am I doing it? What might the results be? Will I be successful?” AI amplifies our choices. It does not make them for us.

At least, not yet.